February 11, 2026 — We held a celebration party for the undergraduate graduation thesis and master's thesis presentations!

February 10, 2026 — Master’s students from our lab presented their thesis research!

February 2, 2026 — Undergraduate students from our lab presented their graduation research!

December 16 and 23, 2025 — We had a year-end party in the laboratory!

December 18, 2025 — Associate Professor Arai appeared at the event and gave a presentation!

December 16, 2025 — A promotional video of our laboratory, along with a short version, has been released!

November 19, 2025 — Our laboratory was interviewed for the university's public relations magazine!

September 26, 2025 — The lab held a study camp on machine learning at the TUS Seminar House!

August 5, 2025 — Visited NOVARE, a facility of Shimizu Corporation!

July 4, 2025 — After the graduate school entrance exam, the lab held a celebration gathering!

June 17, 2025 — Participated in a campus introduction video and interview for university promotion!

May 23, 2025 — Participated in a disassembly workshop hosted by Panasonic Holdings Corporation!

April 20, 2025 — Our lab participated in the spring open campus event!

April 1, 2025 — The Arai Lab held a welcome party for new members!

March 18–19, 2025 — First-year master's student Ginga Kennis presented at the 30th Robotics Symposium!

March 18, 2025 — The graduation and degree conferral ceremony was held!

February 6, 2025 — The undergraduate thesis presentations were held!

October 23–24, 2024 — Visited Mt. Mizugaki for a two-day lab trip!

October 19–21, 2024 — Held an intensive camp on Transfer Learning at TUS Seminar House!

September 30, 2024 — Malaysian students visited as part of the Sakura Science Program!

September 7, 2024 — Graduate and undergraduate students presented their research at ROOB 2024!

August 27–30, 2024 — Graduate students presented their research at SICE 2024!

June 7, 2024 — Visited by students from Fujimi Junior and Senior High School!

May 30–31, 2024 — Associate Professor Arai presented at ROBOMECH 2024!

May 30–31, 2024 — First-year master's student Ryosei Kaneko presented at ROBOMECH 2024!

May 30–31, 2024 — First-year master's student Ginga Kennis presented at ROBOMECH 2024!

May 30–31, 2024 — First-year master's student Koki Moriya presented at ROBOMECH 2024!

August 10, 2024 — Join our lab tour at the Summer Open Campus!

May 18–22, 2024 — Held an intensive training camp on Machine Learning at the TUS Seminar House!

May 14, 2024 — Prof. Arai's paper on Active Visual Servoing was accepted in IEEE Access!

April 21, 2024 — Lab tours were held during the Spring Open Campus!

April 21, 2024 — Lab tours to be held during the Spring Open Campus!

March 5–6, 2024 — First-year master's student Kensei Tanaka presented at the Robotics Symposium!

March 5–6, 2024 — Fourth-year undergraduate Raito Murakami presented at the Robotics Symposium!

February 26, 2024 — Prof. Arai presented at the "Productivity Improvement Robot Seminar"!

March 5–6, 2024 — First-year master's student Kensei Tanaka's paper was accepted at the Robotics Symposium!

March 5–6, 2024 — Fourth-year undergraduate Raito Murakami's paper was accepted at the Robotics Symposium!

December 6–8, 2023 — Graduation project retreat held at TUS Seminar House!

November 24–25, 2023 — Thank you for visiting our booth at the TUS Festival!

September 26–29, 2023 — Held intensive training camp at TUS Seminar House!

September 6–9, 2023 — First-year master's student Kensei Tanaka presented at SICE 2023!

September 6–9, 2023 — Fourth-year undergraduate Raito Murakami presented at SICE 2023!

September 6–9, 2023 — Fourth-year undergraduate Ginga Kennis presented at SICE 2023!

September 2, 2023 — First-year master's student Kensei Tanaka presented at ROOB 2023!

September 2, 2023 — Fourth-year undergraduate Ryosei Kaneko presented at ROOB 2023!

September 2, 2023 — Fourth-year undergraduate Koki Moriya presented at ROOB 2023!

September 2, 2023 — Fourth-year undergraduate Masaya Hasegawa presented at ROOB 2023!

June 30, 2023 — First-year master's student Shotaro Natsui presented at ROBOMECH 2023!

First-year master's student Takumi Sagano presented at ROBOMECH 2023!

First-year master's student Kensei Tanaka presented at ROBOMECH 2023!

Departmental softball tournament held by the Department of Mechanical and Aerospace Engineering!

Now accepting lab tour inquiries from students interested in joining the lab in their fourth year! Contact: arai.shogo@rs.tus.ac.jp


The Intelligent Robotics Laboratory conducts research and development on robotic systems that address social issues using technologies such as robotics, control theory, machine learning, and image processing. Our work focuses on the key technologies required for robotic manipulation—3D measurement, environment and object recognition, grasp planning, and visual servoing—and their integration into complete robot systems. We are also exploring applications of these technologies in biomedical image analysis and multi-agent systems.

This system automatically removes bolts using a robot arm equipped with a camera, a projector, and an electric screwdriver, with the screwdriver positioned by vision-based servo control. As the amount of electronic waste continues to grow, improving recycling efficiency has become essential to achieving the SDGs. However, because the products to be disassembled are diverse and each is handled only in small volumes, conventional teaching-and-playback systems that rely on fixed jigs are unsuitable, and a general-purpose automatic disassembly system that needs no positioning jig is indispensable. Focusing on bolt removal, we automated the task by positioning the screwdriver with active visual servo control.

This robot system cuts wires: a camera and a cutting tool mounted on the robot arm are used to recognize the wiring and the cutting position and then make the cut. The recycling rate of electronic waste remains below 50%, as disassembly is still largely manual. To accelerate recycling, we need a general-purpose disassembly system that requires no jigs. Our research focuses on wire cutting. Because deformable objects such as cables and harnesses have no CAD data and are difficult to recognize, we achieved automation using instance segmentation and keypoint detection.

We study 3D reconstruction methods for scenes involving transparent or reflective objects, which are difficult to measure accurately. Using a hand-eye camera, the system captures images from multiple viewpoints, reconstructs the scene in 3D, and performs picking based on the reconstruction results. Because this method relies only on a monocular camera, it can be implemented at low cost. This research was supported by the JKA Foundation.

This robot system performs bin picking and kitting tasks, which are essential in logistics and manufacturing. It picks up objects piled randomly in a bin and arranges them in a designated location. As e-commerce companies like Amazon and Rakuten pursue warehouse automation, bin-picking robots are becoming indispensable. Similarly, in factories, efficient production under variable demand requires such systems. Our research focuses on key technologies—3D measurement and recognition, grasp planning, motion planning, and active visual servoing—to realize high-performance bin-picking and kitting robots.

Visual servoing refers to the theoretical framework of controlling a robot based on visual information. Just as humans rely on vision to understand and interact with their environment, autonomous robots require visual feedback to perform tasks accurately. We propose a new framework called Active Visual Servoing, which extends conventional methods and enables high-precision manipulation of textureless or reflective objects.
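To make "controlling a robot based on visual information" concrete, the sketch below implements the classical image-based visual servoing (IBVS) law from the general literature, v = -λ L⁺ e, where e is the difference between the current and desired image-point features and L is the stacked point-feature interaction matrix. It is not the lab's Active Visual Servoing method, only a textbook example of the conventional approach the paragraph refers to. Assumptions: point features in normalized image coordinates, known depths Z, a 6-DoF camera velocity (vx, vy, vz, wx, wy, wz), and the pseudo-inverse computed through the normal equations, which needs at least three points in a non-degenerate configuration. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # normalized image coordinates (x, y)


def interaction_matrix(points: Sequence[Point], depths: Sequence[float]) -> List[List[float]]:
    """Stack the classical 2x6 interaction matrix of each point feature.

    Columns correspond to the camera velocity (vx, vy, vz, wx, wy, wz).
    """
    L: List[List[float]] = []
    for (x, y), z in zip(points, depths):
        L.append([-1.0 / z, 0.0, x / z, x * y, -(1.0 + x * x), y])
        L.append([0.0, -1.0 / z, y / z, 1.0 + y * y, -x * y, -x])
    return L


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("interaction matrix is rank-deficient")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        acc = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - acc) / M[r][r]
    return x


def ibvs_velocity(s: Sequence[Point], s_star: Sequence[Point],
                  depths: Sequence[float], gain: float = 0.5) -> List[float]:
    """Classical IBVS law v = -gain * L^+ e, with L evaluated at the current features."""
    if len(s) < 3:
        raise ValueError("need at least three point features")
    L = interaction_matrix(s, depths)
    # Feature error e = s - s*, flattened as (x1, y1, x2, y2, ...).
    e = [d for (x, y), (xs, ys) in zip(s, s_star) for d in (x - xs, y - ys)]
    rows = len(L)
    # Pseudo-inverse via the normal equations: L^+ e = (L^T L)^-1 L^T e.
    LtL = [[sum(L[k][i] * L[k][j] for k in range(rows)) for j in range(6)] for i in range(6)]
    Lte = [sum(L[k][i] * e[k] for k in range(rows)) for i in range(6)]
    return [-gain * vi for vi in solve(LtL, Lte)]
```

For example, with four points forming a square around the image center at depth 1 and a target that shifts every point by +0.02 in x, `ibvs_velocity(square, target, [1.0] * 4)` yields a vx of approximately -0.01 with the other components approximately zero: moving the camera in -x makes the points drift in +x in the image. In a real loop, this velocity would be recomputed every frame until the feature error vanishes.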

Object regrasping is an essential skill for general-purpose autonomous robots. In this study, we achieved sub-millimeter regrasping accuracy using a dual-arm robot by applying our Active Visual Servoing technique, enabling fast and precise handover between arms.

For autonomous robots, 3D measurement is a fundamental capability, much like human binocular vision. Although various sensors exist, measuring metallic or transparent objects remains difficult. We aim to develop a versatile 3D measurement device capable of accurate and inexpensive sensing for objects with diverse optical properties. Incorporating mathematical approaches such as sparse modeling and deep learning, we pursue both theoretical innovation and practical implementation for industrial use.

The number of collaborative robots working alongside humans is increasing yearly. To operate efficiently, such robots must predict human movements and act autonomously to shorten task times while ensuring safety and comfort. Our research aims to realize these systems by studying human motion prediction and autonomous motion planning for collaborative robots.

To combat cancer and infectious diseases effectively, it is essential to understand the control mechanisms underlying various biological phenomena. This study targets the fruit fly (Drosophila), one of the most commonly used model organisms: more than 70% of human disease-related genes have counterparts in Drosophila. We propose image-based identification and tracking algorithms. Using our method, we clarified the function of taste sensory neurons located in the fly's legs, and part of this research was published in Nature Communications. Applying this technique makes large-scale drug screening using genetically modified Drosophila achievable, which can significantly accelerate the development of new medicines.

The Arai Lab introduction video is now available. It features students sharing their genuine perspectives—from why they chose the lab and the appeal of the research to daily activities and future career paths. Please take a look.

The Tosa Brothers introduce the ramen-making robot that actually operated at the Rikkyo Festival, along with research conducted at the Arai Laboratory, in an interview format. Please take a look.

This video introduces the appeal of Tokyo University of Science's Noda Campus, presented by current students. If you'd like to get a feel for the university's atmosphere, please take a look. It also briefly shows scenes from the Arai Laboratory during the 2025 Open Campus event.

A short video of our automatic ramen-cooking robot is now available. The robot was exhibited at the university festival.

A short-format introduction video of the Arai Laboratory has been released. Please take a look.

2nd Year Master's Student

2nd Year Master's Student

1st Year Master's Student

1st Year Master's Student

1st Year Master's Student

1st Year Master's Student

1st Year Master's Student

4th Year Undergraduate Student

4th Year Undergraduate Student

4th Year Undergraduate Student

4th Year Undergraduate Student

4th Year Undergraduate Student

4th Year Undergraduate Student

4th Year Undergraduate Student

4th Year Undergraduate Student

Affiliation: Associate Professor, Department of Mechanical and Aerospace Engineering, Faculty of Science and Technology, Tokyo University of Science

Degree: Ph.D. in Information Science (March 2010, Tohoku University)

Professional Experience:

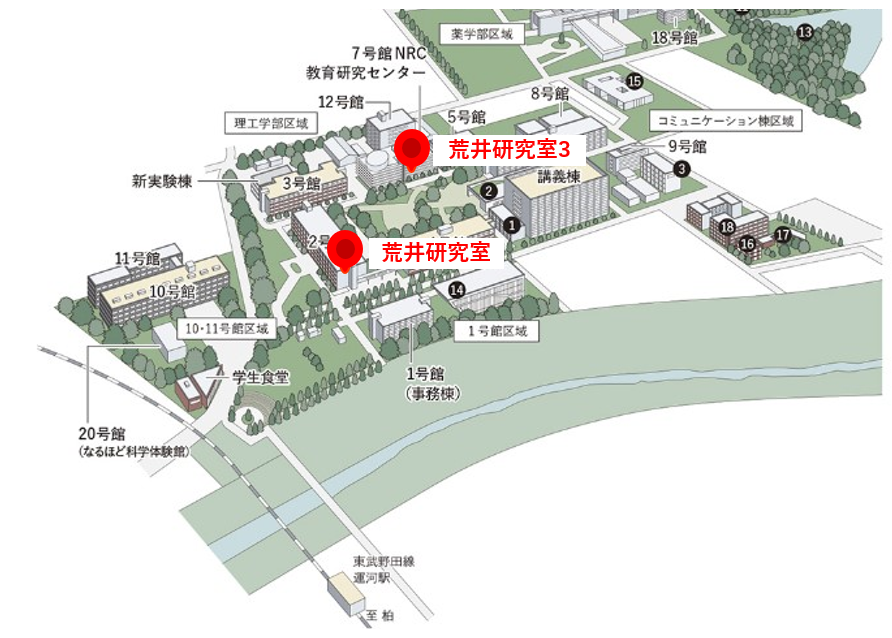

Building 2, 1st Floor, Arai Lab, Noda Campus, Tokyo University of Science, Yamasaki 2641, Noda City, Chiba Prefecture

Building 7, 3rd Floor, Arai Lab 3, Noda Campus, Tokyo University of Science, Yamasaki 2641, Noda City, Chiba Prefecture

04-7124-1501 (ext. 3925)

arai.shogo@rs.tus.ac.jp
